Last week I was working on a system design course where we were asked to build a real-time database. For simplicity, we limited our scope to:

- persistent key value store.

- each client must have its own version of the store.

- sync with events made by other clients.

Here is how I approached the problem.

V1: Simple KV store using a hashmap

Let’s start with a simple hashmap and build an abstraction over it.

type Store struct {

db map[string]string

}

func NewStore() *Store {

store := &Store{db: map[string]string{}}

return store

}

func (k Store) Get(key string) string {

return k.db[key]

}

func (k Store) Put(key string, value string) {

k.db[key] = value

}

While this is a key-value store, it doesn’t persist data and has no idea about other clients, so it doesn’t solve our problem.

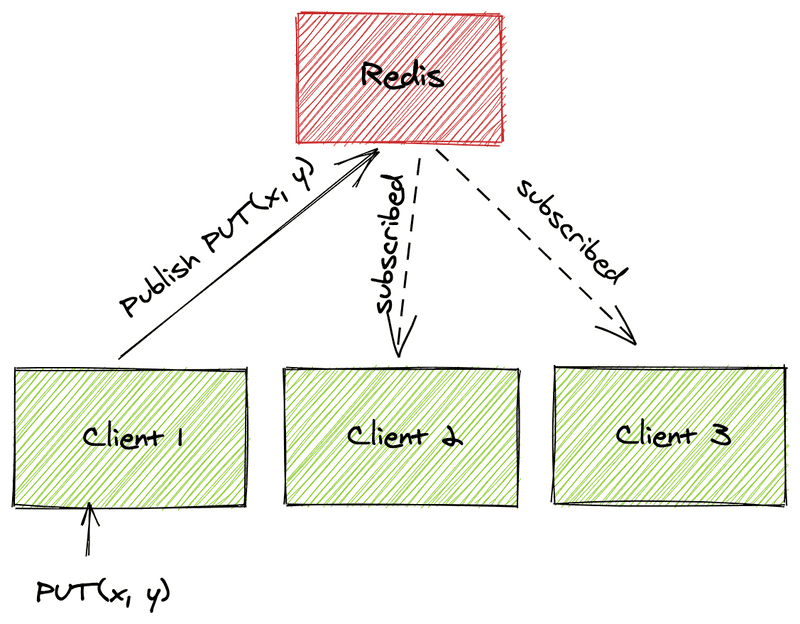

V2: Using Redis as a pub/sub engine to sync clients

To sync data between clients we use Redis’s pub/sub functionality. Every client broadcasts all of its PUT events. All other clients subscribe to the same channel and update their local database as events arrive. We don’t do any conflict resolution; the latest event simply wins.
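The event flow can be sketched without a running Redis instance. In the minimal sketch below, `Broker` is an in-memory stand-in for the Redis channel, and `PutEvent`, `Client`, and the choice to skip delivery back to the sender are all assumptions made for illustration, not part of the original code. It only shows the "broadcast every PUT, latest event wins" behaviour.

```go
package main

import (
	"fmt"
	"sync"
)

// PutEvent is a hypothetical wire format for a broadcast PUT.
type PutEvent struct {
	Source string // ID of the client that made the write
	Key    string
	Value  string
}

// Broker is an in-memory stand-in for a Redis pub/sub channel.
type Broker struct {
	mu   sync.Mutex
	subs []*Client
}

// Publish delivers the event to every subscriber except its sender.
func (b *Broker) Publish(ev PutEvent) {
	b.mu.Lock()
	defer b.mu.Unlock()
	for _, c := range b.subs {
		if c.id != ev.Source {
			c.apply(ev)
		}
	}
}

// Client keeps its own copy of the store.
type Client struct {
	id     string
	mu     sync.Mutex
	db     map[string]string
	broker *Broker
}

// NewClient creates a client and subscribes it to the broker.
func NewClient(id string, b *Broker) *Client {
	c := &Client{id: id, db: map[string]string{}, broker: b}
	b.mu.Lock()
	b.subs = append(b.subs, c)
	b.mu.Unlock()
	return c
}

// apply overwrites unconditionally: no conflict resolution, latest event wins.
func (c *Client) apply(ev PutEvent) {
	c.mu.Lock()
	defer c.mu.Unlock()
	c.db[ev.Key] = ev.Value
}

// Put writes locally, then broadcasts the write to the other clients.
func (c *Client) Put(key, value string) {
	ev := PutEvent{Source: c.id, Key: key, Value: value}
	c.apply(ev)
	c.broker.Publish(ev)
}

// Get reads from the client's local copy only.
func (c *Client) Get(key string) string {
	c.mu.Lock()
	defer c.mu.Unlock()
	return c.db[key]
}

func main() {
	b := &Broker{}
	a, c := NewClient("a", b), NewClient("c", b)
	a.Put("color", "red")
	c.Put("color", "blue")
	fmt.Println(a.Get("color"), c.Get("color")) // blue blue
}
```

The mutex around each map is there because, with a real subscriber, events arrive on a different goroutine than the one calling `Put`.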

type Store struct {

db map[string]string

redisClient redis.Client

}

func NewStore() *Store {

redisClient := redis.NewClient(&redis.Options{

Addr: "localhost:6379",

Password: "",

DB: 0,

})

store := &Store{db: map[string]string{},

redisClient: *redisClient,

}

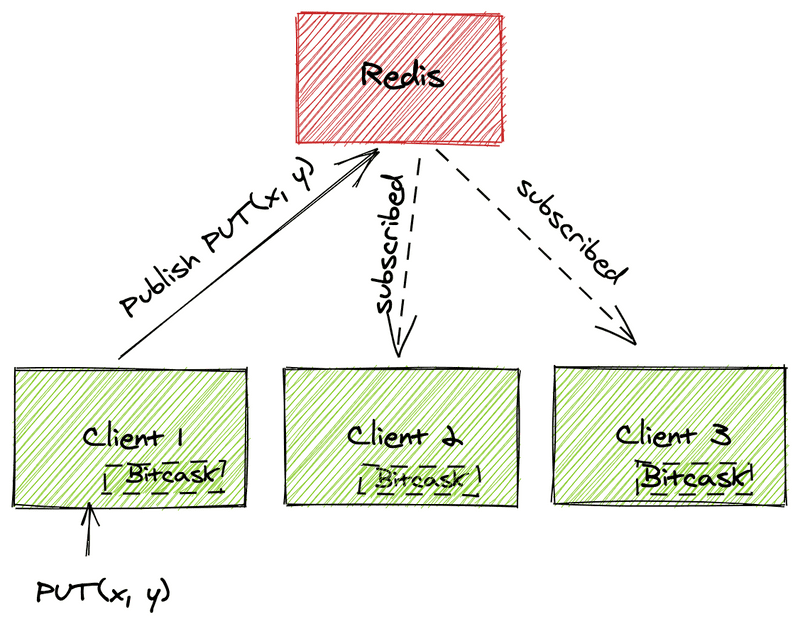

}V3: Persist data on each node for cold start

So far our clients have not persisted any data, since we’ve only been working with in-memory hashmaps. Bitcask is an embedded key-value store, and we use it to replace the in-memory hashmap. Bitcask allows only one OS process to open a database for writing at a time, so each client needs its own client ID, which we use to name its database file.

type Store struct {

db *bitcask.Bitcask

redisClient redis.Client

}

func NewStore() *Store {

redisClient := redis.NewClient(&redis.Options{

Addr: redisAddr,

Password: redisPassword,

DB: redisDB,

})

var dbFile = "/tmp/" + *clientId

db, err := bitcask.Open(dbFile)

if err != nil {

log.Fatal("Failed to open db " + err.Error())

}

store := &Store{db: db,

redisClient: *redisClient,

}

return store

}

V4: Event buffer

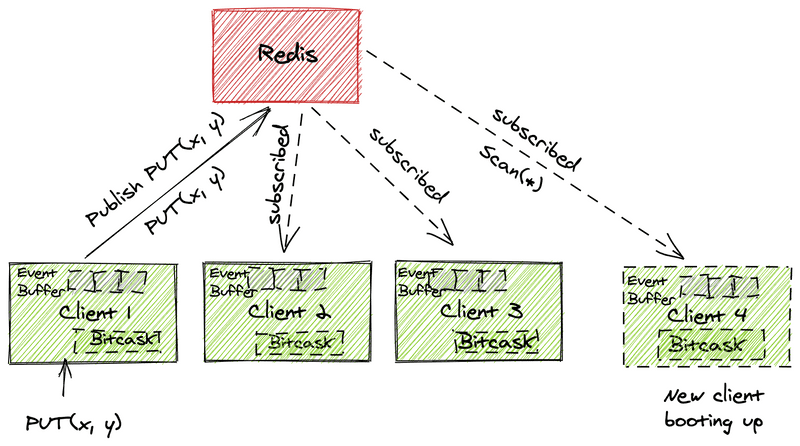

We’ve now solved keeping a local persistent copy of the data and syncing events between clients. One problem that remains is cold starts: a client that boots up has missed every event published while it was offline. We solve it by:

- persisting data in both Bitcask and Redis.

- introducing an event buffer in every client.

When a client boots up, it starts reading the existing data from Redis and, at the same time, subscribes to Redis for new events and stores them in a buffer. Once it has finished reading the initial state from Redis, it starts processing the buffered events.
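The boot sequence can be sketched with channels alone. In this minimal sketch the `snapshot` map stands in for the state read from Redis and the `events` channel stands in for the Redis subscription; the `Event` type, the `process` goroutine, and the `done` channel are assumptions added so the example can run on its own. It uses `chan struct{}` where the store below uses `chan bool`.

```go
package main

import "fmt"

// Event is a hypothetical decoded PUT event.
type Event struct{ Key, Value string }

type Store struct {
	db       map[string]string
	buffer   chan Event
	initDone chan struct{} // closed once the initial state is loaded
	done     chan struct{} // closed once the event stream ends (demo only)
}

func NewStore(snapshot map[string]string, events <-chan Event, bufferSize int) *Store {
	s := &Store{
		db:       map[string]string{},
		buffer:   make(chan Event, bufferSize),
		initDone: make(chan struct{}),
		done:     make(chan struct{}),
	}
	go s.subscribe(events)
	go s.loadData(snapshot)
	go s.process()
	return s
}

// subscribe starts right away and parks incoming events in the buffer.
// In this sketch it blocks when the buffer is full.
func (s *Store) subscribe(events <-chan Event) {
	for ev := range events {
		s.buffer <- ev
	}
	close(s.buffer)
}

// loadData copies the initial state, then signals that it is done.
func (s *Store) loadData(snapshot map[string]string) {
	for k, v := range snapshot {
		s.db[k] = v
	}
	close(s.initDone)
}

// process waits for the initial load, then applies events in arrival order,
// so a buffered event always overrides the older snapshot value.
func (s *Store) process() {
	<-s.initDone
	for ev := range s.buffer {
		s.db[ev.Key] = ev.Value
	}
	close(s.done)
}

func main() {
	events := make(chan Event, 2)
	events <- Event{Key: "a", Value: "new"} // published before loading finishes
	events <- Event{Key: "b", Value: "2"}
	close(events)

	s := NewStore(map[string]string{"a": "old"}, events, 100)
	<-s.done
	fmt.Println(s.db["a"], s.db["b"]) // new 2
}
```

Subscribing before the load starts matters: an event published during the load lands in the buffer instead of being missed.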

type Store struct {

db *bitcask.Bitcask

redisClient redis.Client

buffer chan string

initDone chan bool

bufferSize int64

}

func NewStore(redisAddr string, redisPassword string, redisDB int, redisChannel string, clientId string, bufferSize int64) *Store {

redisClient := redis.NewClient(&redis.Options{

Addr: redisAddr,

Password: redisPassword,

DB: redisDB,

})

var dbFile = "/tmp/" + clientId

bufferChan := make(chan string, bufferSize)

db, err := bitcask.Open(dbFile)

if err != nil {

log.Fatal("Failed to open db " + err.Error())

}

store := &Store{db: db,

redisClient: *redisClient,

buffer: bufferChan,

initDone: make(chan bool),

bufferSize: bufferSize,

}

go store.loadData()

go store.subscribe()

return store

}

There is still a lot of room for improvement, but so far this setup has been working fine for a simple application that I’m working on.

The source code is available on GitHub.