Consistent hashing is a special type of hashing such that hash table is resized. To understand the problem consistent hashing solves lets consider a distributed cache which stores movie names and movie metadata.

Cache

class AbstractCache(ABC):

def __setitem__(self, key: T, val: T): ...

def __getitem__(self, key: T) -> T: ...Let’s implement a CacheNode using python’s dictionary as an implementation of this abstract base class. We can think of these as individual cache nodes part of a distributed cache

class CacheNode(AbstractCache):

def __init__(self, name: str):

self.cache = {}

self.name = name

def __setitem__(self, key: T, val: T):

self.cache[key] = val

def __getitem__(self, key: T) -> T:

return self.cache[key]Hashing

Hashing is the process of mapping a key to a hash.

In [2]: hash('The Shawshank Redemption')

Out[2]: -917102468363987049We can use the hash function and the number of servers to find the server id for a given key very quickly!

def get_server_id(key: str, N: int) -> int:

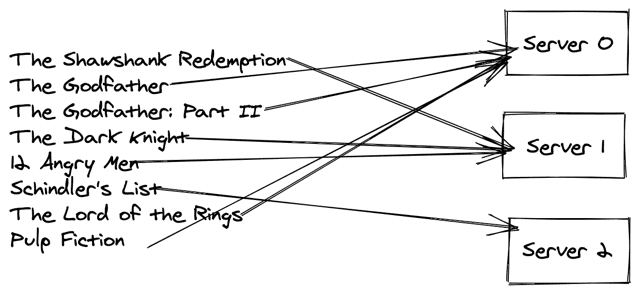

return hash(key)%NLet’s say we start with 3 servers

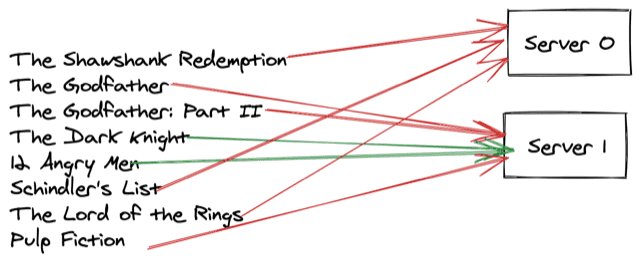

Now say one of the server crashes and we’re left with only 2 servers

As you can see once of of the servers go down we have to almost all of our keys point to different servers, case in point “The Shawshank redemption” which was assigned server 1 now points to server 2 and hence is marked with a red arrow in the diagram above. This is going to cause a lot of cache misses and defats the purpose of the cache.

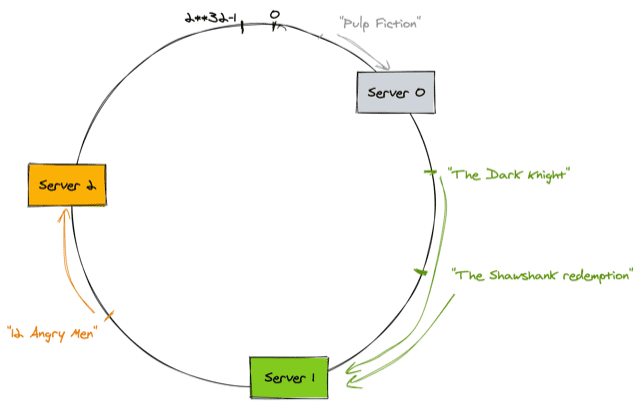

To work in an environment where servers keep coming up and down we need to come up with a scheme that rehashes only 1/nth keys instead of all the keys

Ideally adding or removing servers should only affect 1/nth of the keys.

Consistent Hashing

Consistent hashing provides a hashing scheme which doesn’t directly depend on the number of servers.

Md5 and Sha-1 are cryptographic hash functions and are computationally expensive for fast hashing. Non-cryptographic hash functions like murmurhash, xxhash, metrohash etc. are all good candidates. Following is an implementation of a DistributedCache that uses consistent hashing.

class AbstractConsistentHashing(ABC):

def add_node(self, node: CacheNode): ...

def get_node(self, key: str) -> CacheNode: ...

def del_node(self, node: CacheNode): ...

class DistributedCache(AbstractConsistentHashing, AbstractCache):

def __init__(self, n_replicas: int = 10):

self._nodes = {}

self._keys = []

self.n_replicas = n_replicas

def _hash(self, key: str) -> int:

m = md5(bytes(key, 'ascii'))

return int(m.hexdigest(), 16)

def _get_hashes(self, node: CacheNode) -> List[int]:

return [self._hash(f'{i}:{node.name}') for i in range(self.n_replicas)]

def del_node(self, node: CacheNode):

del self._nodes[node.name]

def get_node(self, key: str) -> CacheNode:

h = self._hash(key)

start = bisect.bisect(self._keys, h) % len(self._keys)

return self._nodes[self._keys[start]]

def add_node(self, node: CacheNode):

for h in self._get_hashes(node):

self._nodes[h] = node

bisect.insort(self._keys, h)

def __getitem__(self, key: str) -> str:

node = self.get_node(key)

return node[key]

def __setitem__(self, key, val):

node = self.get_node(key)

node[key] = val