In their paper Design Patterns for Container-Based Distributed Systems, Brendan Burns and David Oppenheimer lay out three single-node patterns used in microservices architectures. There is documentation with examples of implementing these patterns on Kubernetes, but I couldn't find many examples for HashiCorp's Nomad. My goal is to work through each pattern with examples that use Nomad as the job scheduler. All the code used here is available in the GitHub repository.

Quick Introduction to Nomad

Nomad is "a simple and flexible workload orchestrator to deploy and manage containers and non-containerized applications across on-prem and clouds at scale." It uses HCL (HashiCorp Configuration Language) to specify jobs that are submitted to the cluster. Each job consists of one or more groups, and each group contains one or more tasks. Tasks in the same group are co-located, that is, placed on the same node.

```hcl
job "example" {
  group "example" {
    # Both tasks in this group are placed on the same Nomad client
    task "example-service" {
      # ...
    }

    task "example-service2" {
      # ...
    }
  }
}
```

This post assumes that you're already familiar with Nomad; if not, please check out HashiCorp's excellent documentation.

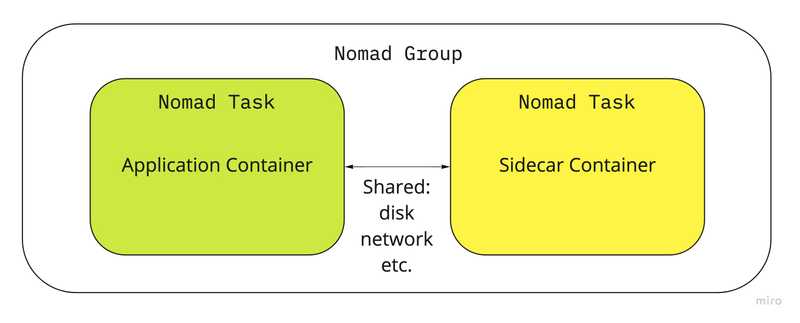

Sidecar Pattern

The sidecar pattern is made up of 2 containers:

- the application container

- the sidecar container

The role of the sidecar is to augment and improve the application container, often without the application container's knowledge.

Because the sidecar works alongside the application, both containers must be scheduled on the same host machine. In Nomad this is done with the group stanza:

The group stanza defines a series of tasks that should be co-located on the same Nomad client. Any task within a group will be placed on the same client.

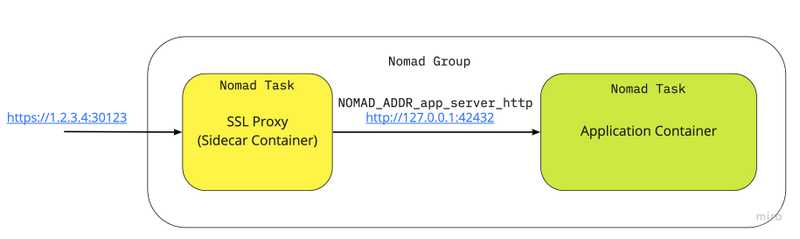

Example: SSL Proxy

In this example we'll see how to enable SSL for a legacy application. We could modify the application itself to handle HTTPS, but it's much more convenient to add an SSL proxy as a sidecar container. Here we deploy our application, HashiCorp's Echo Server, with an Nginx server as a sidecar proxy that:

- Intercepts all incoming traffic

- Terminates HTTPS

- Proxies the traffic to the application container

To enable this, we create a new task called ssl-proxy-sidecar that mounts the SSL certificate and the SSL certificate key. It proxies all traffic to NOMAD_ADDR_app_server_http, the environment variable through which Nomad exposes the application task's HTTP address (IP and port) to the other tasks in the group.

A working code example is available here.
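For a quick picture of the shape of such a job, here is a minimal sketch (not the repository's exact file). It co-locates an `app-server` task running `hashicorp/http-echo` with an `ssl-proxy-sidecar` task running Nginx. Assumptions to flag: the image tags, the `app-server` task name (inferred from the `NOMAD_ADDR_app_server_http` variable), the `datacenters` value, and that the certificate and key already exist in the sidecar's `secrets/` directory as `tls.crt` and `tls.key` (how they get there, for example via Vault or an artifact stanza, is not shown). It uses the same task-level `network`/`port_map` style as the exporter task later in this post.

```hcl
job "ssl-proxy" {
  datacenters = ["dc1"] # assumption: adjust to your cluster

  group "app" {
    # Application task: the echo server, unaware of TLS
    task "app-server" {
      driver = "docker"

      config {
        image = "hashicorp/http-echo" # assumption: pin a tag in practice
        args  = ["-listen", ":5678", "-text", "hello from the app"]

        port_map {
          http = 5678
        }
      }

      resources {
        network {
          port "http" {}
        }
      }
    }

    # Sidecar task: terminates TLS and forwards plain HTTP to app-server
    task "ssl-proxy-sidecar" {
      driver = "docker"

      config {
        image   = "nginx:1.19" # assumption: any recent Nginx image
        command = "nginx"
        # The Docker driver mounts the task's local/ and secrets/ dirs at /local and /secrets
        args    = ["-c", "/local/nginx.conf", "-g", "daemon off;"]

        port_map {
          https = 443
        }
      }

      template {
        destination = "local/nginx.conf"
        data        = <<EOF
events {}

http {
  server {
    listen 443 ssl;
    ssl_certificate     /secrets/tls.crt;
    ssl_certificate_key /secrets/tls.key;

    location / {
      # Address of the app-server task's "http" port in this group
      proxy_pass http://{{ env "NOMAD_ADDR_app_server_http" }};
    }
  }
}
EOF
      }

      resources {
        network {
          port "https" {}
        }
      }
    }
  }
}
```

Rendering the config into `local/` and pointing `nginx -c` at it avoids bind-mounting a single file, which can go stale when the template is re-rendered.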

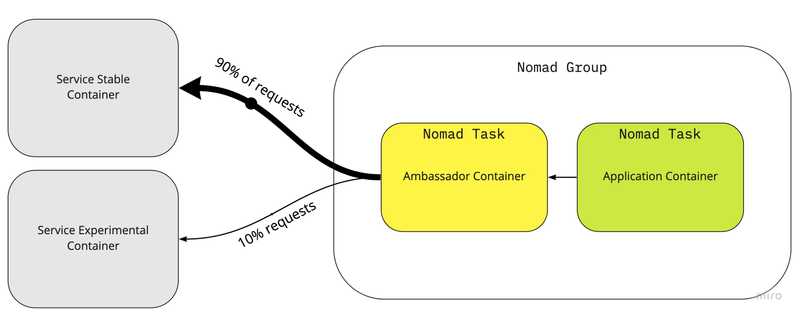

Ambassador Pattern

The ambassador pattern uses an auxiliary container to act as a broker between the application container and the outside world.

Example: Request Splitting or A/B Testing

In many production environments, when rolling out an update it's often beneficial to send only a fraction of all requests to the new version of the service. A common method is A/B testing (also known as split testing), in which a usually small proportion of users is directed to the new version of an application while most users continue to use the current version.

Our first Nomad job file, service.nomad, contains two tasks, service-stable and service-experimental, which run the stable and experimental containers respectively. Both services are different versions of the echo server.
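A minimal sketch of what service.nomad could look like, assuming Consul is available so the `service` stanzas register the tasks under the names the Nginx template queries (`app-server-stable` and `app-server-experimental`). The article runs two different versions of the echo server; here they are told apart only by their `-text` argument, which is an assumption for illustration. Putting the two tasks in separate groups is also an assumption: they don't need to share a node, and separate groups can be scaled independently.

```hcl
job "service" {
  datacenters = ["dc1"] # assumption: adjust to your cluster

  # Stable version: receives most of the traffic
  group "stable" {
    task "service-stable" {
      driver = "docker"

      config {
        image = "hashicorp/http-echo" # assumption: pin a tag in practice
        args  = ["-listen", ":5678", "-text", "stable"]

        port_map {
          http = 5678
        }
      }

      resources {
        network {
          port "http" {}
        }
      }

      # Registered in Consul; the ambassador's template looks up this name
      service {
        name = "app-server-stable"
        port = "http"
      }
    }
  }

  # Experimental version: receives the remaining share of traffic
  group "experimental" {
    task "service-experimental" {
      driver = "docker"

      config {
        image = "hashicorp/http-echo" # assumption: pin a tag in practice
        args  = ["-listen", ":5678", "-text", "experimental"]

        port_map {
          http = 5678
        }
      }

      resources {
        network {
          port "http" {}
        }
      }

      service {
        name = "app-server-experimental"
        port = "http"
      }
    }
  }
}
```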

A second job file, app.nomad, contains our request-splitter task: an Nginx server acting as the request-splitting ambassador for our main task, app. Here's a snippet of its Nginx config. The upstream servers are filled in from Consul's service catalog when Nomad renders the template, and split_clients hashes each client's address to choose an upstream:

```nginx
http {
  upstream app_stable {
    {{ range service "app-server-stable" }}
    server {{ .Address }}:{{ .Port }};
    {{ end }}
  }

  upstream app_experimental {
    {{ range service "app-server-experimental" }}
    server {{ .Address }}:{{ .Port }};
    {{ end }}
  }

  # 90% of clients (hashed on client IP) go to stable, the rest to experimental
  split_clients "${remote_addr}" $appversion {
    90% app_stable;
    *   app_experimental;
  }

  server {
    listen 80;

    location / {
      # $appversion holds the name of the upstream chosen above
      proxy_pass http://$appversion;
    }
  }
}
```

The full code example is available on GitHub. In it, you'll notice the application container is left blank; the idea is that the application container would use the ambassador container to reach the external service instead of contacting it directly. I might implement a simple application and update the example in the future.
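How the request-splitter task renders this config isn't shown above. One possible shape, assuming Nomad 1.0 or later (for the HCL2 `file()` function), that the job is submitted from the directory containing app.nomad, and that the complete Nginx config (the upstreams and split_clients block plus an `events {}` block and a `server` block doing `proxy_pass http://$appversion;`) is saved there as `nginx.conf.tpl`. The file name, image tag, and port are assumptions.

```hcl
task "request-splitter" {
  driver = "docker"

  config {
    image   = "nginx:1.19" # assumption: any recent Nginx image
    command = "nginx"
    # Run Nginx with the config rendered into the task's local/ dir (mounted at /local)
    args    = ["-c", "/local/nginx.conf", "-g", "daemon off;"]

    port_map {
      http = 80
    }
  }

  template {
    # file() returns the raw file contents at submit time, so "${remote_addr}"
    # is not treated as HCL interpolation; only {{ ... }} is rendered
    data        = file("nginx.conf.tpl")
    destination = "local/nginx.conf"

    # Re-render when Consul service instances change, then ask Nginx to reload
    change_mode   = "signal"
    change_signal = "SIGHUP"
  }

  resources {
    network {
      port "http" {}
    }
  }
}
```

With `change_mode = "signal"`, scaling either version up or down updates the upstream lists and reloads Nginx in place instead of restarting the task (the default change mode).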

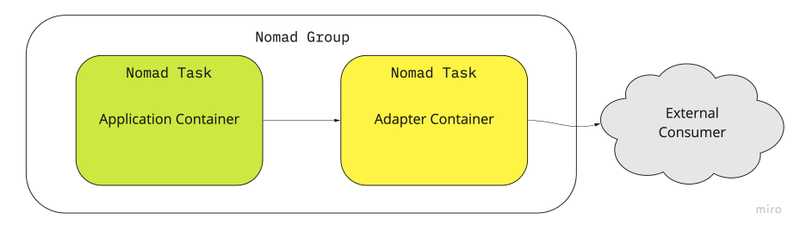

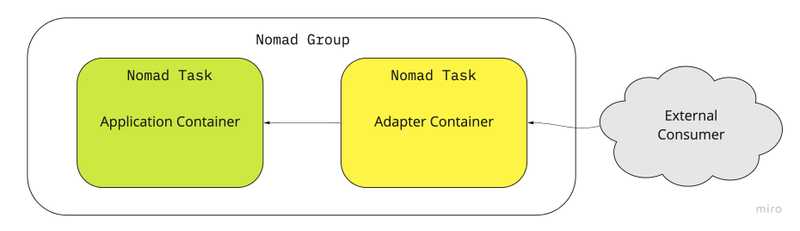

Adaptor Pattern

The adaptor pattern changes the interface of an existing application container into some other interface without modifying the application container. Adapter is one of the classic Gang of Four design patterns for classes and objects; here we apply the same idea to containers.

Example: Monitoring with Prometheus

Prometheus is an industry-standard monitoring system and time series database. In this example we'll see how to use the adaptor pattern to add telemetry to an existing application container without modifying the application. Prometheus expects applications to expose a metrics endpoint that serves metrics in its exposition format. In the previous examples Nginx was the auxiliary container; here we treat Nginx as the application container and use the Nginx Prometheus exporter container as our adaptor, converting the metrics from Nginx's stub_status page into a format Prometheus can scrape.

We define the following task for the Nginx exporter to act as our adaptor for Prometheus. In the Prometheus config we can then point a scrape job at the adaptor container, which returns metrics in a format Prometheus understands.

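```hcl
task "adaptor-nginx-exporter" {
  driver = "docker"

  config {
    image = "nginx/nginx-prometheus-exporter:0.8.0"

    # Read Nginx's stub_status page from the application task in this group
    args = [
      "--nginx.scrape-uri", "http://${NOMAD_ADDR_service_nginx_http}/status"
    ]

    # The exporter serves Prometheus metrics on port 9113 inside the container
    port_map {
      http = 9113
    }
  }

  resources {
    network {
      mbits = 10
      port "http" {}
    }
  }
}
```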
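The exporter's scrape URI assumes an application task named `service-nginx`, in the same group, with a port labelled `http` that serves Nginx's `stub_status` page at `/status`. A minimal sketch of such an application task follows; the image tag and the placeholder response on `/` are assumptions.

```hcl
task "service-nginx" {
  driver = "docker"

  config {
    image   = "nginx:1.19" # assumption: official image, built with stub_status
    command = "nginx"
    # Use the config rendered into the task's local/ dir (mounted at /local)
    args    = ["-c", "/local/nginx.conf", "-g", "daemon off;"]

    port_map {
      http = 80
    }
  }

  template {
    destination = "local/nginx.conf"
    data        = <<EOF
events {}

http {
  server {
    listen 80;

    # Placeholder for the real application content
    location / {
      return 200 "hello from nginx";
    }

    # Basic connection and request counters read by the exporter
    location = /status {
      stub_status;
    }
  }
}
EOF
  }

  resources {
    network {
      mbits = 10
      port "http" {}
    }
  }
}
```

The application itself is untouched apart from enabling `stub_status`; translating those counters into Prometheus metrics is left entirely to the adaptor task.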